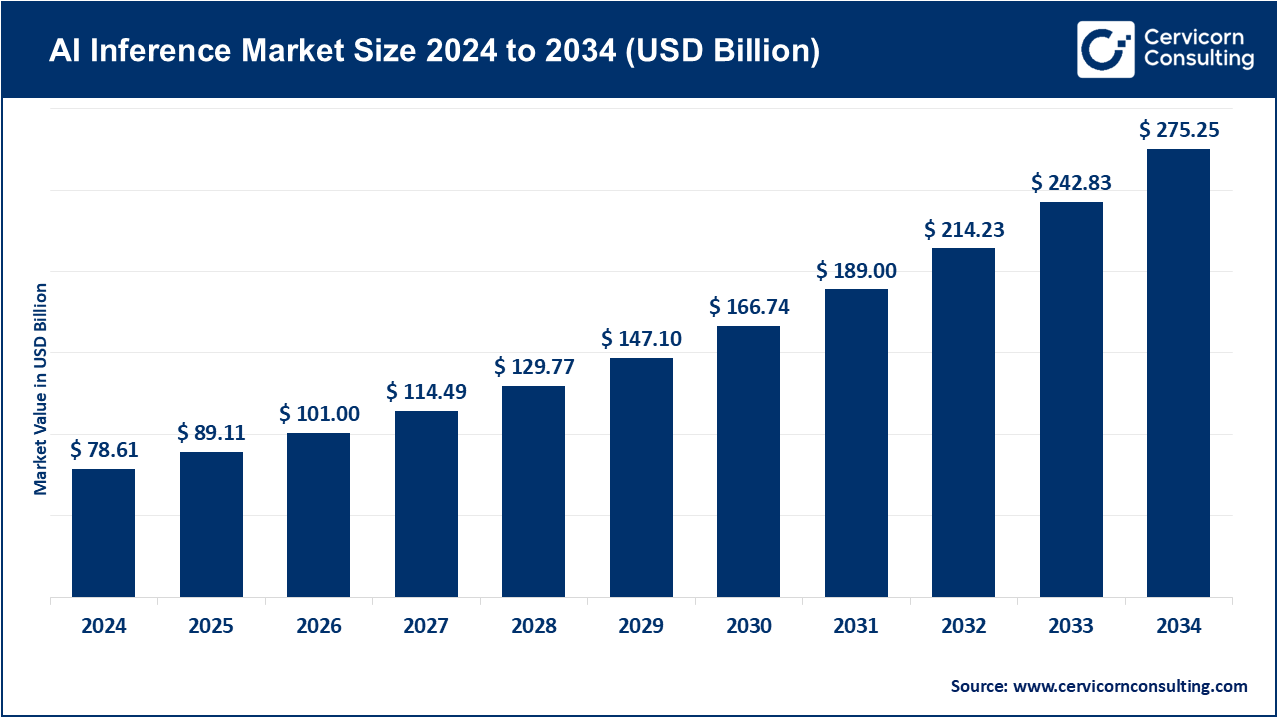

The global AI inference market was valued at USD 78.61 billion in 2024 and is expected to reach around USD 275.25 billion by 2034, growing at a compound annual growth rate (CAGR) of 17.5% over the forecast period 2025 to 2034. The need for scalable, precise, and individualized digital healthcare is driving the AI inference market. The rise of chronic disease, biologics, and personalized medicine is straining the accuracy and timeliness of healthcare. Intelligent analytics applied to synthesized datasets produce insights for better decision making. Sensors and intelligent platforms amplify practitioner productivity through virtual care assistants. Endpoint AI diagnostic systems and edge computing devices improve patient engagement, compliance, and overall care.

Predictive analytics and AI systems that target specific risks in the healthcare ecosystem improve outcomes across the whole system. Digital transformation enables intelligent augmentation across the care continuum, connecting patient IoT devices, telehealth systems, homecare, and smart medical equipment. Competitive markets that emphasize patient safety, operational workflows, and the sustainability of AI systems use predictions and risk models to improve ease of use in the next generation of intelligent medical devices. These gains are amplified by expanding global mobile connectivity and Wi-Fi 6 architecture.

Report Scope

| Area of Focus | Details |
|---|---|
| Market Size in 2025 | USD 89.11 Billion |
| Expected Market Size in 2034 | USD 275.25 Billion |
| Projected CAGR 2025 to 2034 | 17.50% |
| Leading Region | North America |
| Fastest Growing Region | Asia-Pacific |
| Key Segments | Compute, Memory, Network, Deployment, End User, Application, Region |
| Key Companies | Amazon Web Services, Inc., Arm Limited, Advanced Micro Devices, Inc., Google LLC, Intel Corporation, Microsoft, Mythic, NVIDIA Corporation, Qualcomm Technologies, Inc., Sophos Ltd |
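
The growth rate implied by two endpoint values can be checked with the standard CAGR formula, (end / start)^(1 / periods) - 1. The following is a minimal sketch using the 2025 and 2034 market sizes from the report scope. The function name `implied_cagr` and the choice of nine compounding periods (2025 to 2034) are assumptions made for illustration, not the report's stated methodology.

```python
def implied_cagr(start_value: float, end_value: float, periods: int) -> float:
    """Return the compound annual growth rate between two values."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Figures from the report scope (USD billion).
size_2025 = 89.11
size_2034 = 275.25

# Assumption: nine compounding periods between 2025 and 2034.
rate = implied_cagr(size_2025, size_2034, periods=2034 - 2025)
print(f"Implied CAGR 2025-2034: {rate:.2%}")
```

If the computed rate differs from a headline figure, the usual causes are a different base year, a different period count, or rounding in the published endpoints.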

The AI inference market is segmented into several key regions: North America, Europe, Asia-Pacific, and LAMEA (Latin America, Middle East, and Africa). Here’s an in-depth look at each region.

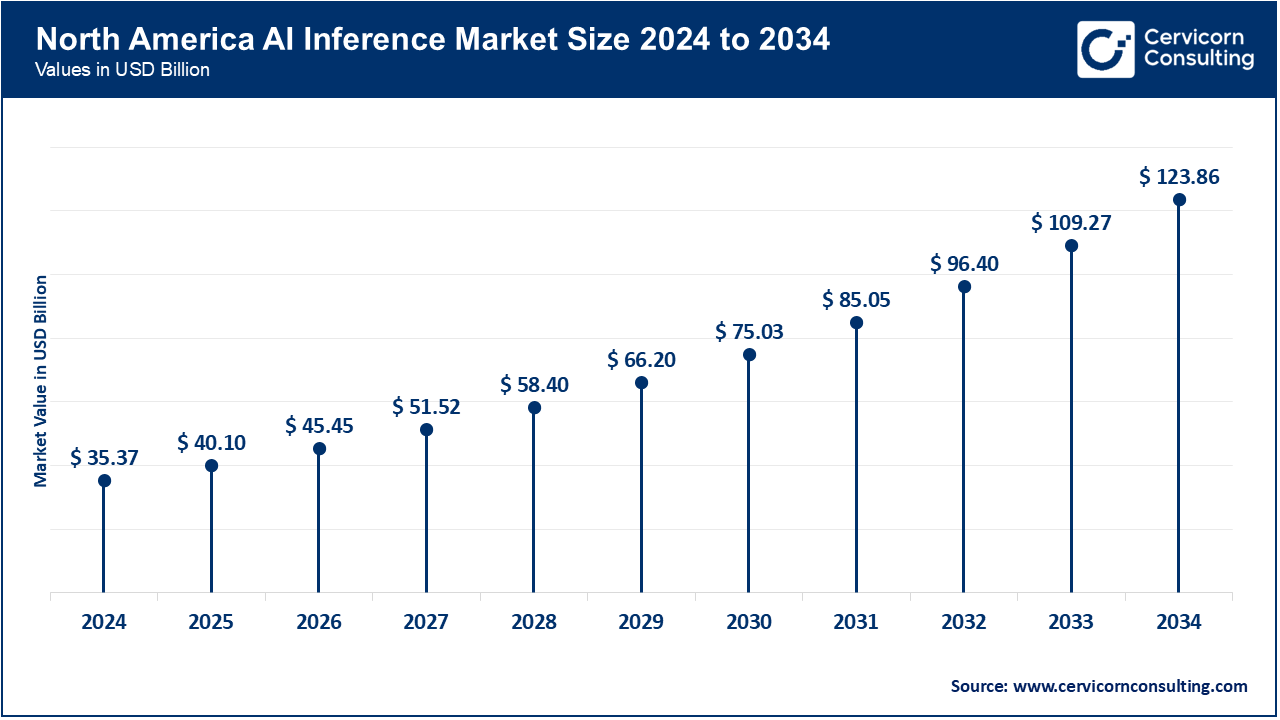

North America is the largest market because of its developed healthcare system, robust regulatory provisions, and considerable funding for R&D and AI-based innovation. Demand is further driven by the high rate of chronic illness and the growing use of home-based, AI-powered healthcare solutions. For example, in September 2025, NVIDIA rolled out its gen-A100 AI inference-based system in several U.S. hospital networks, enabling real-time predictive analytics for patient monitoring in oncology and ICU settings. This shows the region's determination to incorporate the latest AI to improve treatment speed, accuracy, and patient outcomes.

Europe is experiencing steady growth driven by regulatory compliance, sustainability, and strong digital health ecosystems. Governments and medical facilities are encouraging the use of AI-based systems for diagnostics, patient follow-up, and individualized treatment. In February 2025, Siemens Healthineers introduced its AI inference-based imaging platform in some European hospitals to improve the efficiency and accuracy of radiology workflows. Combined with EU-funded projects on digital and environmentally friendly healthcare, Europe continues to incorporate AI inference across hospitals, research facilities, and residential care services.

Asia-Pacific is the fastest-growing region, helped by rapid urbanization, the increasing prevalence of chronic diseases, and rising government investment in healthcare digitalization. Tier II and Tier III cities are seeing rapid introduction of affordable AI-based devices for diagnostics and patient monitoring. For example, in July 2025, QuidelOrtho presented AI-based rapid immunoassay kits to detect respiratory infections in India and Southeast Asia, which reflects the region's focus on accessibility, outbreak preparedness, and proactive healthcare management.

AI Inference Market Share, By Region, 2024 (%)

| Region | Revenue Share, 2024 (%) |
|---|---|
| North America | 45% |
| Europe | 22% |
| Asia-Pacific | 26% |
| LAMEA | 7% |
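
Revenue shares can be turned into approximate regional revenue by multiplying each share by the total market size. The sketch below applies the 2024 shares from the table to the USD 78.61 billion market size reported for 2024. The variable and function names are hypothetical, and the resulting figures are derived arithmetic, not values published in the report.

```python
MARKET_SIZE_2024_USD_BN = 78.61  # 2024 global market size from the report

# Regional revenue shares for 2024 (percent), as listed in the table.
REGION_SHARE_PCT = {
    "North America": 45.0,
    "Europe": 22.0,
    "Asia-Pacific": 26.0,
    "LAMEA": 7.0,
}


def revenue_by_segment(total: float, share_pct: dict[str, float]) -> dict[str, float]:
    """Split a total across segments according to percentage shares."""
    share_sum = sum(share_pct.values())
    # Guard against a table whose shares do not add up to 100%.
    if abs(share_sum - 100.0) > 0.01:
        raise ValueError(f"shares sum to {share_sum}, expected 100")
    return {name: total * pct / 100.0 for name, pct in share_pct.items()}


regional_revenue = revenue_by_segment(MARKET_SIZE_2024_USD_BN, REGION_SHARE_PCT)
for region, revenue in regional_revenue.items():
    print(f"{region}: USD {revenue:.2f} billion")
```

The same function can be applied to the memory and deployment share tables, since each of those sets of shares also sums to 100%.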

LAMEA is an emerging market, boosted by a growing number of healthcare facilities, vaccine programs, and new digital health programs. Adoption lags more developed regions because of shortages of infrastructure and workers, although this is changing. In June 2025, South African researchers tested AI-based diagnostic systems with mobile health units to perform simultaneous HIV and hepatitis testing, which points to efforts to extend AI-assisted healthcare to remote and underserved regions.

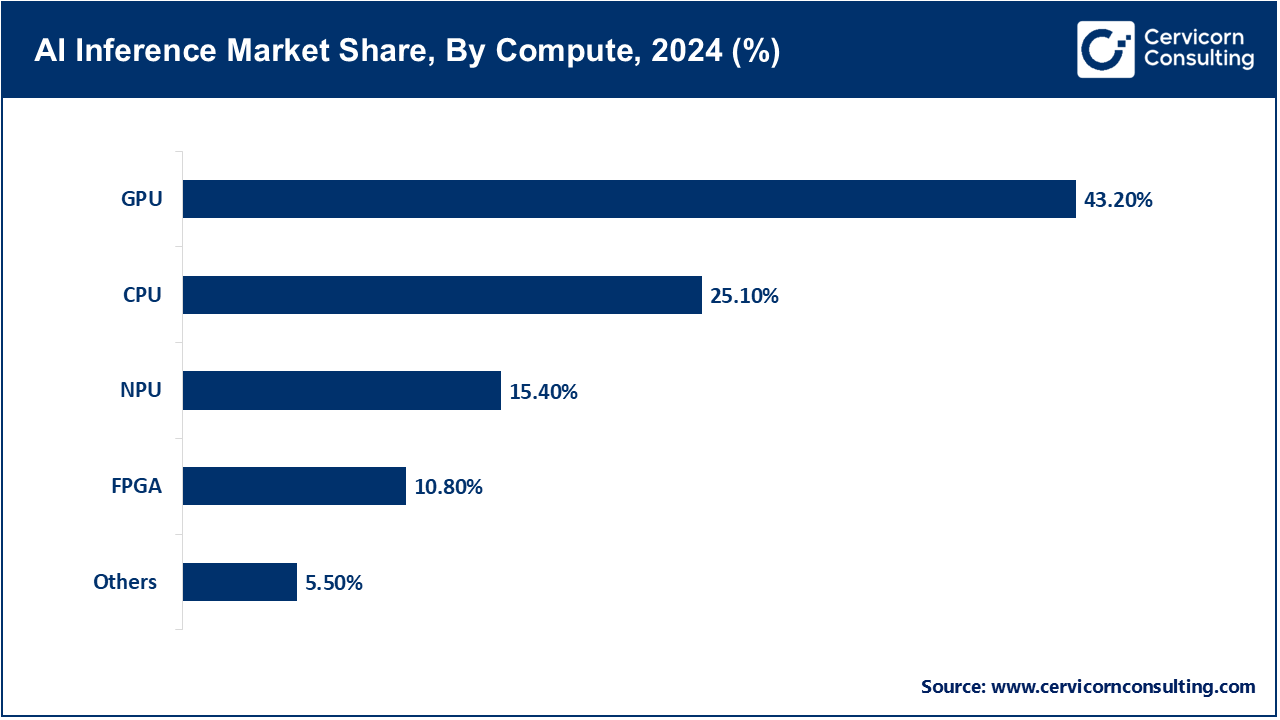

GPU: Because of their ability to process large amounts of data in parallel, their high performance, and their suitability for deep learning models, GPUs have remained the principal resource for AI inference. With the advent of generative AI, computer vision, and large-scale NLP models, GPUs continue to be optimized for healthcare analytics and real-time predictive systems. NVIDIA, for example, released the A100 GPU for edge and cloud inference in June 2025, allowing hospitals to use GPUs for faster AI-based diagnostic and patient monitoring systems. This use case highlights the growing need for real-time GPU analytics.

CPU: CPUs still handle less compute-intensive inference and general workloads, particularly clinical data analytics and real-time monitoring systems where latency and power savings are vitally important. In an April 2025 use case, Intel's Xeon processors were deployed in a hospital's patient data center for machine learning inference on patient records and imaging data, underscoring the continued need for CPU systems.

FPGA: FPGAs allow resource allocation to be customized for AI inference workloads in the healthcare sector, which reduces power consumption. For example, Xilinx FPGAs were integrated into wearable AI systems for continuous glucose monitoring, providing accurate real-time monitoring on a limited budget.

NPU: Yale researchers have identified neural processing units (NPUs) as offering power efficiency on the order of 10x to 100x greater than GPUs and TPUs for real-time inference at the edge. NPUs enable fast model inference on mobile and IoT healthcare devices. Huawei, for example, demonstrated NPU-powered smart health monitors in August 2025 that speed up cardiac risk detection in home care settings.

Others: This category covers architectures such as DSPs and ASICs, as well as crossbeam DSP-ASIC hybrid systems, instruction architectures, and workflow/algorithm ‘cusp’ neural nets designed for specific AI workloads. The diversity of AI compute platforms is evidenced by Graphcore’s May 2025 launch of targeted hybrid AI chips for precision medicine, including oncology treatment modeling and predictive patient outcome analytics.

DDR: For system-level AI inference, DDR remains the standard, supporting healthcare functions from hospital servers to home-care monitoring devices. Its speed and cost-effectiveness sustain its use in clinical settings.

AI Inference Market Share, By Memory, 2024 (%)

| Memory | Revenue Share, 2024 (%) |
|---|---|
| DDR | 38.30% |
| HBM | 61.70% |

HBM: High Bandwidth Memory (HBM) is gaining prominence alongside GPUs and NPUs for handling large models and high-throughput data analysis. For instance, in July 2025, AMD marketed GPUs with HBM for real-time imaging analysis and genomic data processing, supporting more robust and precise diagnostics.

Cloud: Large-scale inference tasks such as population health analytics, telehealth monitoring, and research applications favor cloud deployment. In May 2025, AWS and Microsoft Azure expanded their healthcare AI services, offering HIPAA-compliant inference pipelines to hospitals and research institutes.

On-Premise: On-premise deployments provide data security and reduced latency, which are essential for patient-sensitive healthcare operations. Hospitals that deploy AI inference on-premise, especially for radiology and ICU monitoring, gain better responsiveness and data privacy compliance.

AI Inference Market Share, By Deployment, 2024 (%)

| Deployment | Revenue Share, 2024 (%) |
|---|---|
| Cloud | 42.80% |
| On-Premise | 25.70% |
| Edge | 31.50% |

Edge: Edge inference is increasingly being adopted in IoT health monitors, wearable devices, and point-of-care diagnostics. In June 2025, for instance, Philips added edge AI to portable ultrasound devices to enable real-time image interpretation without a cloud connection.

Generative AI: Generative AI supports drug discovery, augmented medical imaging, and personalized therapy planning. In August 2025, Insilico Medicine used generative AI inference to construct novel drug candidates for rare diseases, streamlining its research pipeline.

Machine Learning: Machine learning is used to stratify patients, predict clinical events, and support real-time surveillance. In March 2025, GE Healthcare deployed ML models that predict patient deterioration in the ICU via AI inference, facilitating timely intervention.

NLP: NLP inference supports automated clinical documentation, voice-enabled diagnostics, and analysis of patient interaction data. In April 2025, Amazon Comprehend Medical helped hospitals derive actionable insights for optimizing treatment by analyzing unstructured EHR data and providing data-driven clinical decision support.

Computer Vision: Computer vision (CV) has broad applications in clinical medicine, including medical imaging, automated analysis of pathology images, and remote patient monitoring. In May 2025, Zebra Medical Vision launched a new line of AI inference-powered radiology tools to detect and diagnose early-stage diseases from X-rays and CT scans, improving diagnostic speed and accuracy.

Market Segmentation

By Compute

By Memory

By Network

By Deployment

By Application

By End User

By Region

Chapter 1. Market Introduction and Overview

1.1 Market Definition and Scope

1.1.1 Overview of AI Inference

1.1.2 Scope of the Study

1.1.3 Research Timeframe

1.2 Research Methodology and Approach

1.2.1 Methodology Overview

1.2.2 Data Sources and Validation

1.2.3 Key Assumptions and Limitations

Chapter 2. Executive Summary

2.1 Market Highlights and Snapshot

2.2 Key Insights by Segments

2.2.1 By Compute Overview

2.2.2 By Memory Overview

2.2.3 By Network Overview

2.2.4 By Deployment Overview

2.2.5 By Application Overview

2.2.6 By End User Overview

2.3 Competitive Overview

Chapter 3. Global Impact Analysis

3.1 Russia-Ukraine Conflict: Global Market Implications

3.2 Regulatory and Policy Changes Impacting Global Markets

Chapter 4. Market Dynamics and Trends

4.1 Market Dynamics

4.1.1 Market Drivers

4.1.1.1 Increasing Burden of Chronic Disease

4.1.1.2 Movement of Home and Point-of-Care Treatment

4.1.2 Market Restraints

4.1.2.1 Expensive AI Platforms and Biologics Integration

4.1.3 Market Challenges

4.1.3.1 Infrastructure and Accessibility Gaps in New Regions

4.1.4 Market Opportunities

4.1.4.1 The Use of Telemedicine Integrating with Telecare

4.1.4.2 Innovation in Wearable Devices and Biologics

4.2 Market Trends

Chapter 5. Premium Insights and Analysis

5.1 Global AI Inference Market Dynamics, Impact Analysis

5.2 Porter’s Five Forces Analysis

5.2.1 Bargaining Power of Suppliers

5.2.2 Bargaining Power of Buyers

5.2.3 Threat of Substitute Products

5.2.4 Rivalry among Existing Firms

5.2.5 Threat of New Entrants

5.3 PESTEL Analysis

5.4 Value Chain Analysis

5.5 Product Pricing Analysis

5.6 Vendor Landscape

5.6.1 List of Buyers

5.6.2 List of Suppliers

Chapter 6. AI Inference Market, By Compute

6.1 Global AI Inference Market Snapshot, By Compute

6.1.1 Market Revenue ($Billion) and Growth Rate (%), 2022-2034

6.1.1.1 GPU

6.1.1.2 CPU

6.1.1.3 FPGA

6.1.1.4 NPU

6.1.1.5 Others

Chapter 7. AI Inference Market, By Memory

7.1 Global AI Inference Market Snapshot, By Memory

7.1.1 Market Revenue ($Billion) and Growth Rate (%), 2022-2034

7.1.1.1 DDR

7.1.1.2 HBM

Chapter 8. AI Inference Market, By Network

8.1 Global AI Inference Market Snapshot, By Network

8.1.1 Market Revenue ($Billion) and Growth Rate (%), 2022-2034

8.1.1.1 NIC/Network Adapters

8.1.1.2 Interconnect

Chapter 9. AI Inference Market, By Deployment

9.1 Global AI Inference Market Snapshot, By Deployment

9.1.1 Market Revenue ($Billion) and Growth Rate (%), 2022-2034

9.1.1.1 Cloud

9.1.1.2 On-Premise

9.1.1.3 Edge

Chapter 10. AI Inference Market, By Application

10.1 Global AI Inference Market Snapshot, By Application

10.1.1 Market Revenue ($Billion) and Growth Rate (%), 2022-2034

10.1.1.1 Generative AI

10.1.1.2 Machine Learning

10.1.1.3 Natural Language Processing

10.1.1.4 Computer Vision

Chapter 11. AI Inference Market, By End-User

11.1 Global AI Inference Market Snapshot, By End-User

11.1.1 Market Revenue ($Billion) and Growth Rate (%), 2022-2034

11.1.1.1 BFSI

11.1.1.2 Healthcare

11.1.1.3 Retail and E-commerce

11.1.1.4 Automotive

11.1.1.5 IT and Telecommunications

11.1.1.6 Manufacturing

11.1.1.7 Security

11.1.1.8 Others

Chapter 12. AI Inference Market, By Region

12.1 Overview

12.2 AI Inference Market Revenue Share, By Region 2024 (%)

12.3 Global AI Inference Market, By Region

12.3.1 Market Size and Forecast

12.4 North America

12.4.1 North America AI Inference Market Revenue, 2022-2034 ($Billion)

12.4.2 Market Size and Forecast

12.4.3 North America AI Inference Market, By Country

12.4.4 U.S.

12.4.4.1 U.S. AI Inference Market Revenue, 2022-2034 ($Billion)

12.4.4.2 Market Size and Forecast

12.4.4.3 U.S. Market Segmental Analysis

12.4.5 Canada

12.4.5.1 Canada AI Inference Market Revenue, 2022-2034 ($Billion)

12.4.5.2 Market Size and Forecast

12.4.5.3 Canada Market Segmental Analysis

12.4.6 Mexico

12.4.6.1 Mexico AI Inference Market Revenue, 2022-2034 ($Billion)

12.4.6.2 Market Size and Forecast

12.4.6.3 Mexico Market Segmental Analysis

12.5 Europe

12.5.1 Europe AI Inference Market Revenue, 2022-2034 ($Billion)

12.5.2 Market Size and Forecast

12.5.3 Europe AI Inference Market, By Country

12.5.4 UK

12.5.4.1 UK AI Inference Market Revenue, 2022-2034 ($Billion)

12.5.4.2 Market Size and Forecast

12.5.4.3 UK Market Segmental Analysis

12.5.5 France

12.5.5.1 France AI Inference Market Revenue, 2022-2034 ($Billion)

12.5.5.2 Market Size and Forecast

12.5.5.3 France Market Segmental Analysis

12.5.6 Germany

12.5.6.1 Germany AI Inference Market Revenue, 2022-2034 ($Billion)

12.5.6.2 Market Size and Forecast

12.5.6.3 Germany Market Segmental Analysis

12.5.7 Rest of Europe

12.5.7.1 Rest of Europe AI Inference Market Revenue, 2022-2034 ($Billion)

12.5.7.2 Market Size and Forecast

12.5.7.3 Rest of Europe Market Segmental Analysis

12.6 Asia Pacific

12.6.1 Asia Pacific AI Inference Market Revenue, 2022-2034 ($Billion)

12.6.2 Market Size and Forecast

12.6.3 Asia Pacific AI Inference Market, By Country

12.6.4 China

12.6.4.1 China AI Inference Market Revenue, 2022-2034 ($Billion)

12.6.4.2 Market Size and Forecast

12.6.4.3 China Market Segmental Analysis

12.6.5 Japan

12.6.5.1 Japan AI Inference Market Revenue, 2022-2034 ($Billion)

12.6.5.2 Market Size and Forecast

12.6.5.3 Japan Market Segmental Analysis

12.6.6 India

12.6.6.1 India AI Inference Market Revenue, 2022-2034 ($Billion)

12.6.6.2 Market Size and Forecast

12.6.6.3 India Market Segmental Analysis

12.6.7 Australia

12.6.7.1 Australia AI Inference Market Revenue, 2022-2034 ($Billion)

12.6.7.2 Market Size and Forecast

12.6.7.3 Australia Market Segmental Analysis

12.6.8 Rest of Asia Pacific

12.6.8.1 Rest of Asia Pacific AI Inference Market Revenue, 2022-2034 ($Billion)

12.6.8.2 Market Size and Forecast

12.6.8.3 Rest of Asia Pacific Market Segmental Analysis

12.7 LAMEA

12.7.1 LAMEA AI Inference Market Revenue, 2022-2034 ($Billion)

12.7.2 Market Size and Forecast

12.7.3 LAMEA AI Inference Market, By Country

12.7.4 GCC

12.7.4.1 GCC AI Inference Market Revenue, 2022-2034 ($Billion)

12.7.4.2 Market Size and Forecast

12.7.4.3 GCC Market Segmental Analysis

12.7.5 Africa

12.7.5.1 Africa AI Inference Market Revenue, 2022-2034 ($Billion)

12.7.5.2 Market Size and Forecast

12.7.5.3 Africa Market Segmental Analysis

12.7.6 Brazil

12.7.6.1 Brazil AI Inference Market Revenue, 2022-2034 ($Billion)

12.7.6.2 Market Size and Forecast

12.7.6.3 Brazil Market Segmental Analysis

12.7.7 Rest of LAMEA

12.7.7.1 Rest of LAMEA AI Inference Market Revenue, 2022-2034 ($Billion)

12.7.7.2 Market Size and Forecast

12.7.7.3 Rest of LAMEA Market Segmental Analysis

Chapter 13. Competitive Landscape

13.1 Competitor Strategic Analysis

13.1.1 Top Player Positioning/Market Share Analysis

13.1.2 Top Winning Strategies, By Company, 2022-2024

13.1.3 Competitive Analysis By Revenue, 2022-2024

13.2 Recent Developments by the Market Contributors (2024)

Chapter 14. Company Profiles

14.1 Amazon Web Services, Inc.

14.1.1 Company Snapshot

14.1.2 Company and Business Overview

14.1.3 Financial KPIs

14.1.4 Product/Service Portfolio

14.1.5 Strategic Growth

14.1.6 Global Footprints

14.1.7 Recent Development

14.1.8 SWOT Analysis

14.2 Arm Limited

14.3 Google LLC

14.4 Advanced Micro Devices, Inc.

14.5 Intel Corporation

14.6 Microsoft

14.7 Mythic

14.8 NVIDIA Corporation

14.9 Qualcomm Technologies, Inc.

14.10 Sophos Ltd