Hyperscale Computing Market Size and Growth 2025 to 2034

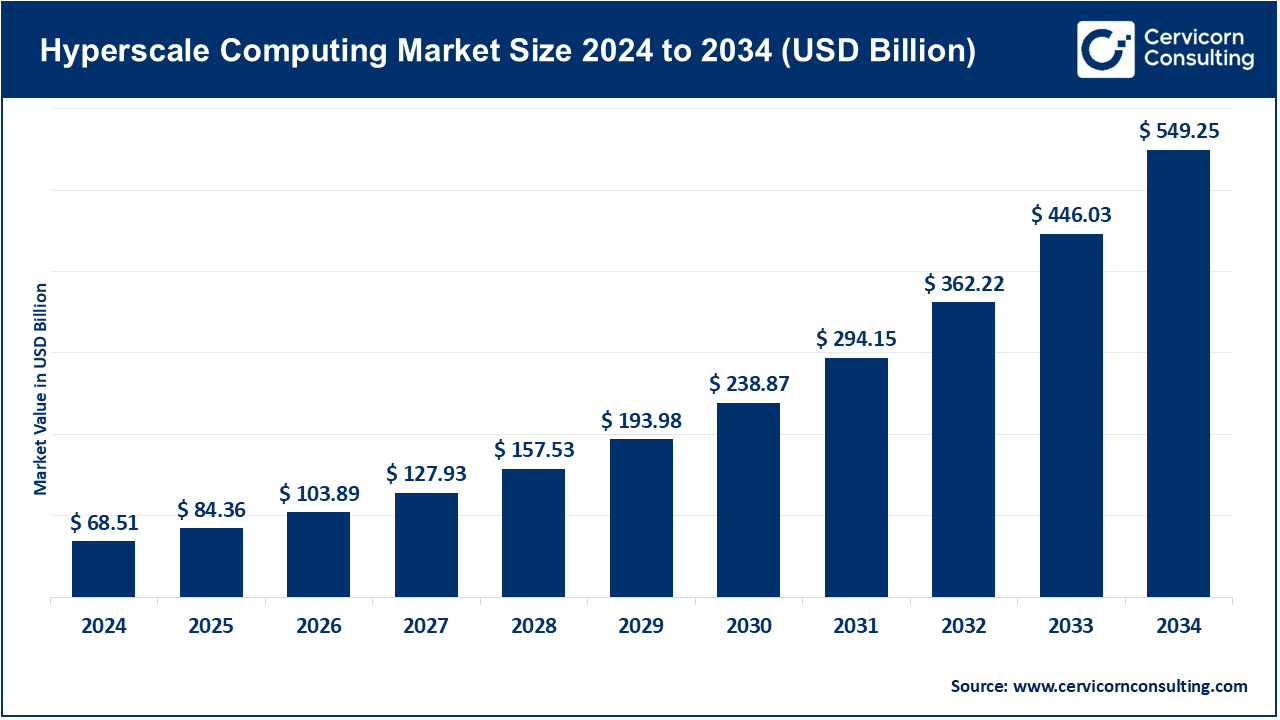

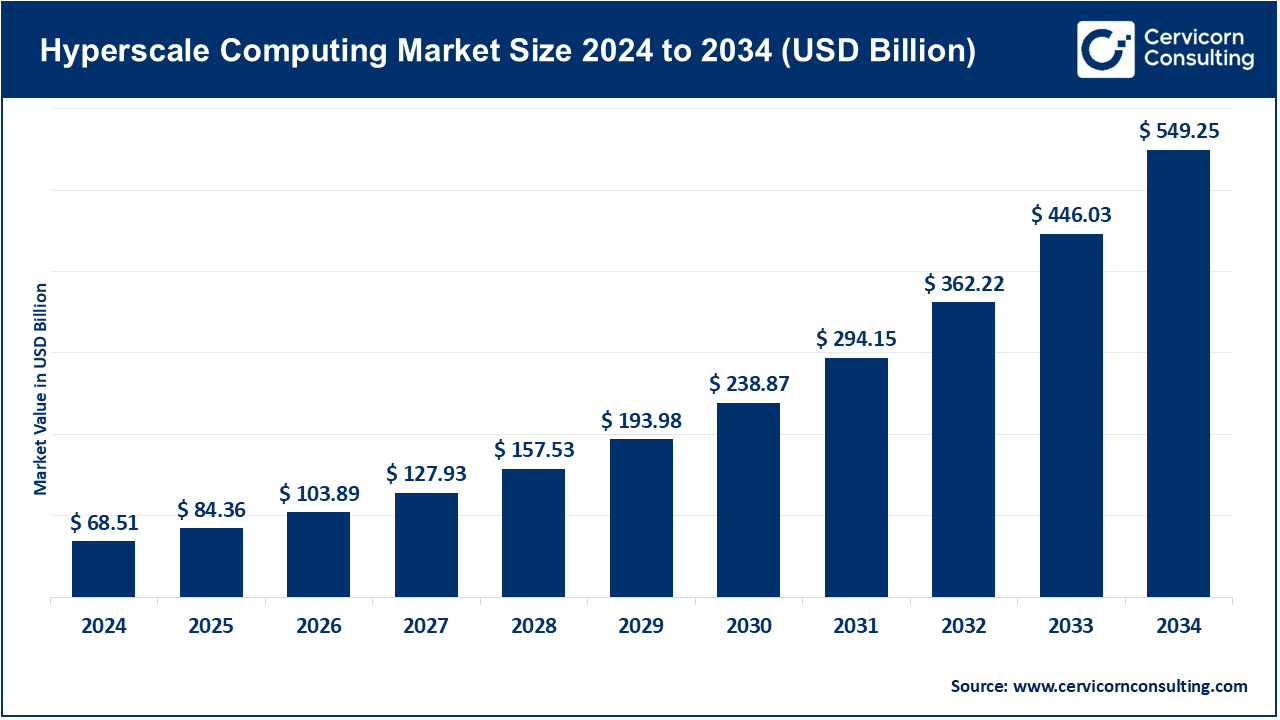

The global hyperscale computing market size was estimated at USD 68.51 billion in 2024 and is expected to reach around USD 549.25 billion by 2034, growing at a compound annual growth rate (CAGR) of 23.14% over the forecast period from 2025 to 2034.
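The headline CAGR can be checked with the standard compound-growth formula, CAGR = (end / start)^(1/n) - 1, where n is the number of compounding years. The minimal Python sketch below assumes n = 10 (2024 base year to 2034) and uses only the two market-size figures quoted above; the function names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` periods apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted above (USD billion); 2024 base year, 2034 end year.
base_2024 = 68.51
forecast_2034 = 549.25

implied = cagr(base_2024, forecast_2034, years=10)
print(f"Implied CAGR: {implied:.2%}")
print(f"2034 value at 23.14%: {project(base_2024, 0.2314, 10):.2f}")
```

With n = 10, the two endpoints reproduce the stated rate of about 23.14%, which suggests the forecast compounds annually from the 2024 base.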

The use of hyperscale computing infrastructure has grown significantly alongside cloud services because of its flexibility and cost-efficiency. Hyperscale infrastructure gives cloud service providers the backbone to use their resources efficiently and to move workloads seamlessly onto virtual systems. As businesses continue their digital transformation initiatives, launching new cloud-based applications, managing data streams, and deploying artificial intelligence (AI) models increasingly depend on cloud infrastructure. In 2024, cloud services reached 94% adoption among enterprises, with 67% shifting toward hybrid or multi-cloud implementations for greater flexibility and lower costs. The leading public clouds, AWS, Microsoft Azure, and Google Cloud, with market shares of 31%, 25%, and 11%, respectively, run over 1,000 hyperscale data centers serving finance, healthcare, and other industries worldwide. SaaS applications are projected to account for 70% of public cloud revenue, with providers such as Salesforce and Zoom serving tens of millions of users concurrently. Hyperscale infrastructure is also significant for the AI industry, supporting workloads such as training GPT-4, which demands thousands of GPUs and multi-petabyte storage with constant access.

What is hyperscale computing?

Hyperscale computing refers to the design and operation of IT environments that can expand and contract seamlessly to match workload fluctuations. Such systems follow a distributed model in which tens of thousands of physical and virtual servers operate under a common orchestration framework to deliver cost-effective, data-intensive processing, extensive object storage, and resilient, high-bandwidth networking. This model underpins cloud computing and big data analytics as well as artificial intelligence (AI) and Internet of Things (IoT) ecosystems. The primary drivers of this shift are cloud adoption, enterprise-wide digital transformation, and organizations' need to process real-time data at unprecedented scale. Organizations in banking, retail, healthcare, media, and other industries use hyperscale technologies to run mission-critical applications, improve operational efficiency, raise user satisfaction, and lower IT expenditures.
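One common way to implement this kind of elasticity is a proportional scaling rule, such as the one documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The sketch below is a simplified, hypothetical illustration, not how any particular hyperscaler scales its fleet; the function name, replica bounds, and load numbers are assumptions, and the 0.1 tolerance mirrors the Kubernetes default. Utilization is expressed in whole percent to keep the arithmetic exact.

```python
import math


def desired_replicas(current_replicas: int,
                     current_utilization: float,
                     target_utilization: float,
                     min_replicas: int = 1,
                     max_replicas: int = 100,
                     tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA (hypothetical helper).

    desired = ceil(current_replicas * current_utilization / target_utilization),
    skipped when the ratio is within `tolerance` of 1.0, then clamped to [min, max].
    """
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave capacity unchanged
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical load spike: 10 servers at 90% CPU against a 60% target -> scale out.
print(desired_replicas(10, 90, 60))
# Hypothetical lull: 10 servers at 15% CPU against a 60% target -> scale in.
print(desired_replicas(10, 15, 60))
```

The same rule both expands and contracts capacity, and the tolerance band keeps small fluctuations from causing constant resizing.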

IT Spending in USD Billion, 2024

| Country | IT Spending, 2024 (USD Billion) |
|---|---|
| United States | 1,350 |
| China | 465 |
| Japan | 203 |
| Germany | 149 |
| United Kingdom | 135 |

Hyperscale Computing Market Report Highlights

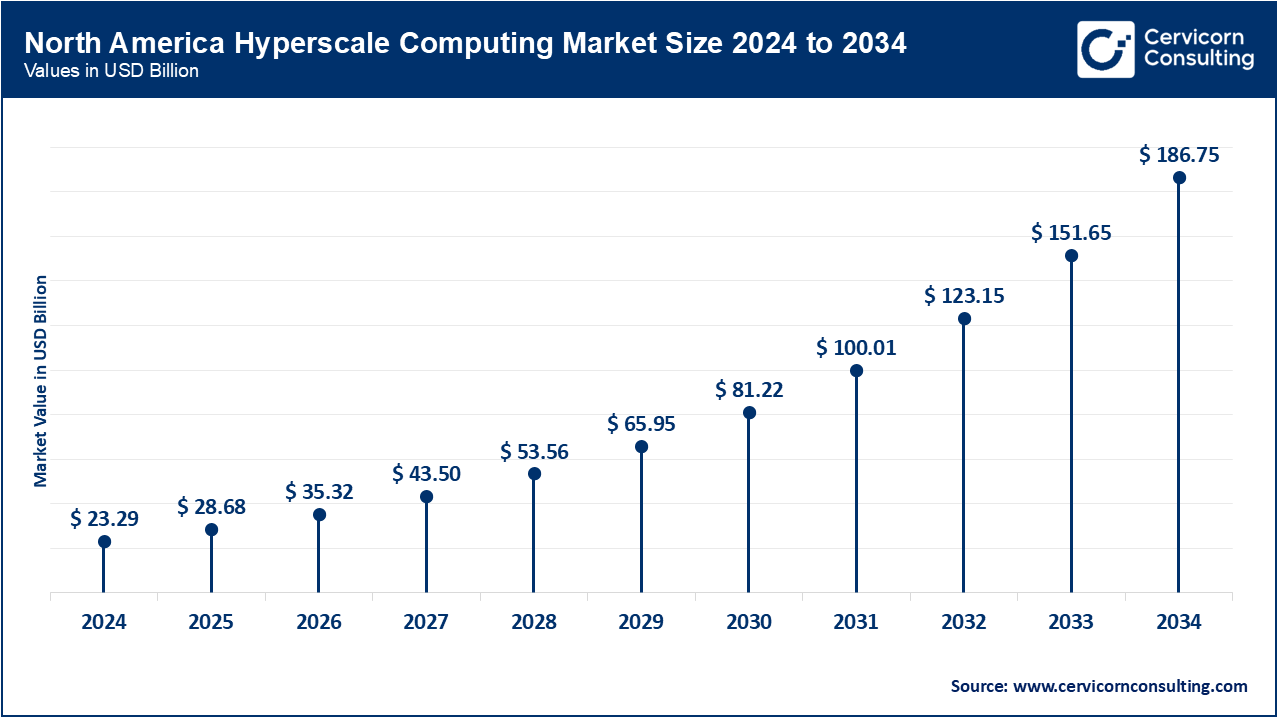

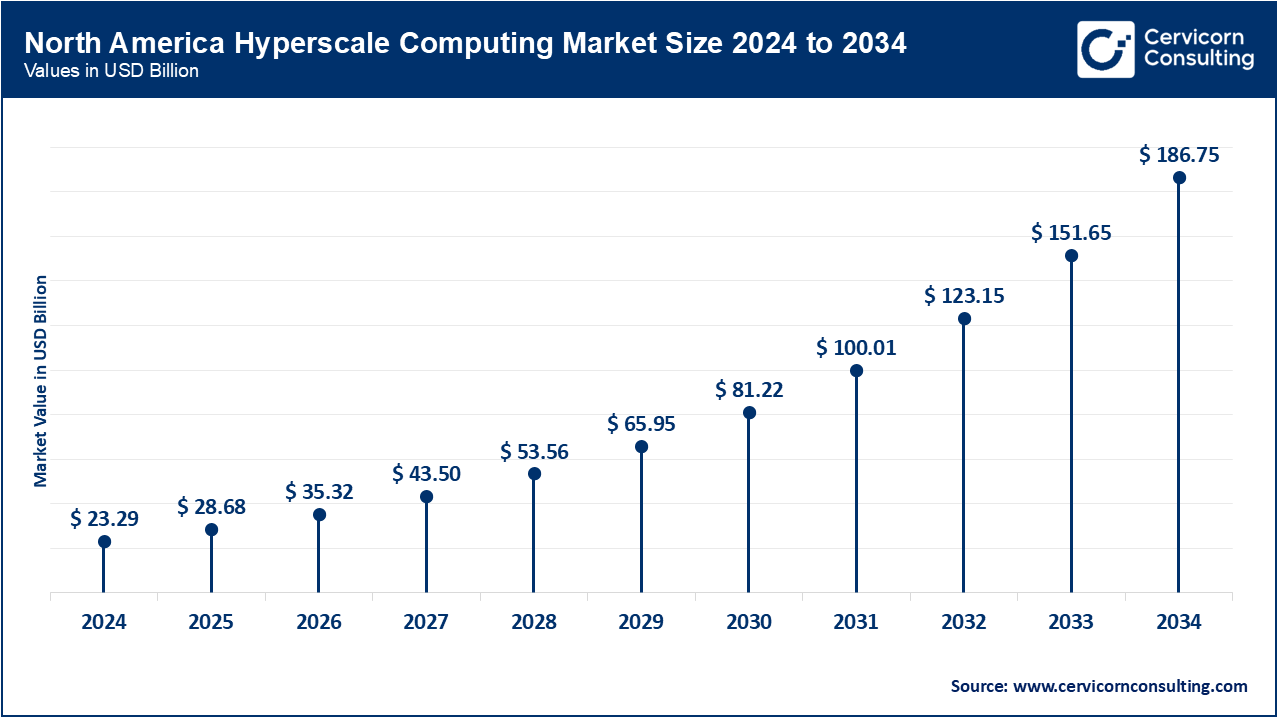

- By region, North America dominated the market with a 34% share in 2024 and is anticipated to expand at a CAGR of over 20% over the forecast period. High research and development (R&D) expenditure, an established IT services market, and the presence of numerous cloud service providers are driving market growth in the region.

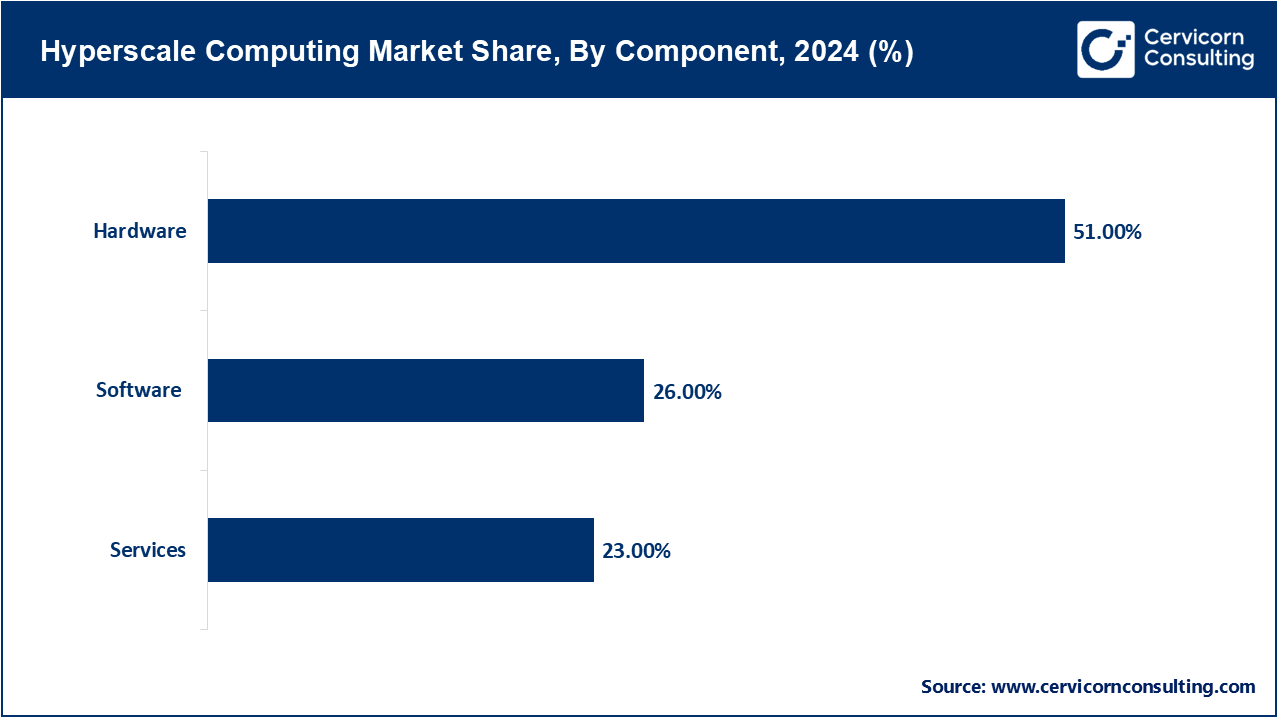

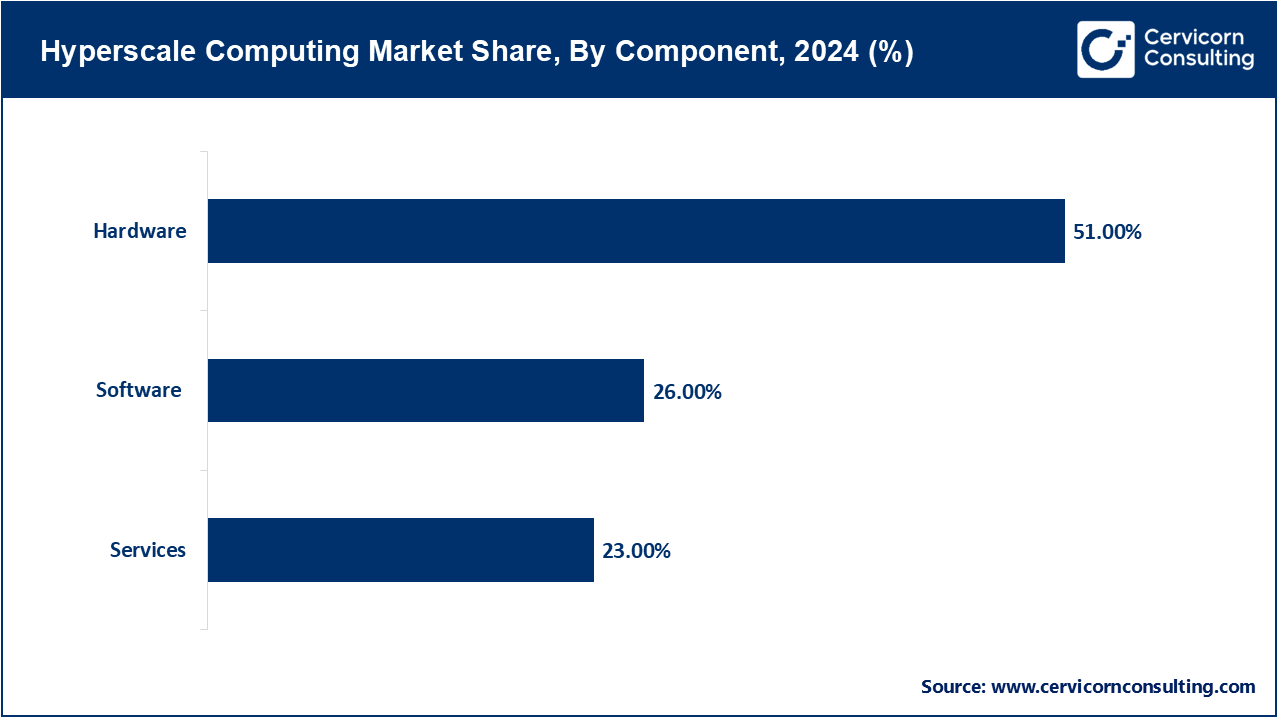

- By Component, the hardware segment accounted for 51% of total revenue in 2024.

- By Component, the services segment is anticipated to grow at a CAGR of 23%, fueled by rising demand for system integration and managed services.

- By End User, both the BFSI and healthcare industries are expanding at a significant rate, with a CAGR of over 20%, because of increased adoption of data-driven processes and digital transformation initiatives.

- By Deployment Type, the cloud-based segment remains the market leader with a 60% share, owing to its scalability and cost-efficient operations.

- Hybrid cloud is growing faster than any other segment in regulated industries such as government and healthcare, at a CAGR of over 21%.

- By Enterprise Size, the large enterprises segment accounted for 70.40% of revenue in 2024.

- By Application, the cloud computing segment accounted for a revenue share of 45% in 2024.

Hyperscale Computing Market Trends

- Integration of AI and Machine Learning in Hyperscale Data Centers: The integration of AI and ML into hyperscale data centers is a primary growth driver for the hyperscale computing industry. Beyond improving new and existing workflows, it enables predictive management, intelligent automation, and real-time, resource-level optimization across all infrastructure layers. Over 60% of hyperscale data centers are expected to deploy AI and ML within the next year, and AI- and ML-driven predictive maintenance is cutting component failure rates by 30%. Hyperscale infrastructure that integrates GPU clusters and AI accelerators for AI workloads is expected to improve silicon utilization by 20-25% through intelligent workload scheduling and cooling optimization. Google, which has invested heavily in AI workload performance and in training large language models, applies DeepMind's AI to data center cooling, reducing the energy used for cooling by up to 40%.

- Shift Toward Sustainable and Energy-Efficient Hyperscale Infrastructure: Adopting energy-efficient practices is an important trend toward sustainable hyperscale infrastructure. As global demand for data centers grows rapidly, hyperscale operators and cloud service providers face tighter expectations on how environmentally friendly their operations can be. Because of their scale, hyperscale facilities draw enormous amounts of power, often as much as a small town. With growing public advocacy for environmental protection, these operators need to act proactively to reduce their carbon footprint. Leaders such as Amazon (AWS), Microsoft, and Google have set goals of reaching 100% renewable energy and carbon neutrality, with commitments extending to carbon negativity over the next decade. To power their data centers, these companies are developing solar farms and investing in wind and hydroelectric energy.
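The source does not describe the models operators use for the AI- and ML-driven predictive maintenance mentioned in the first trend above. As a deliberately simple stand-in (a statistical baseline, not machine learning), the Python sketch below flags a component whose telemetry suddenly deviates from its recent history using a rolling z-score; the function name, window size, threshold, and temperature readings are all hypothetical.

```python
from statistics import mean, stdev


def flag_anomalies(readings: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices whose value deviates from the trailing-window mean by more
    than `threshold` sample standard deviations (hypothetical helper)."""
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip flat histories: a zero standard deviation makes the z-score undefined.
        if sigma > 0 and abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical drive-temperature readings (deg C) with one sudden spike at index 8.
temps = [41.0, 41.5, 40.8, 41.2, 41.1, 41.3, 40.9, 41.4, 48.0, 41.2]
print(flag_anomalies(temps))
```

Production systems would combine many signals and learned models, but the principle is the same: compare current behavior with an expected baseline and act before the component fails.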

Hyperscale Computing Market Dynamics

Market Drivers

- Surge in Cloud Services and Data Explosion Across Industries: The rapid expansion of cloud services, coupled with a data explosion across industries, is one of the most pivotal growth drivers for the hyperscale computing market. Alongside the ongoing digitization of business processes, the adoption of e-commerce, IoT devices, social media, and smart technologies has dramatically increased the volume of data generated globally. According to industry estimates, the world generated over 120 zettabytes of data in 2023, a figure projected to double within the next few years, with sensors, mobile devices, and enterprise applications producing more than 2.5 quintillion bytes daily. This surge creates demand for infrastructure that can store, process, and analyze massive datasets in real time with low latency, which is exactly what hyperscale computing provides. Hyperscale data centers, equipped with thousands of servers and distributed storage systems, are designed to deliver the high-capacity computing environments that big data management requires. Real-time big data analytics in e-commerce, social media, and the Internet of Things (IoT) is made possible by hyperscale platforms that coordinate tens of thousands of servers and petabyte-scale datasets.

- Growing Demand for High-Performance Computing in AI, IoT, and Analytics: The rapid uptake of artificial intelligence, the Internet of Things, and sophisticated, latency-sensitive analytics workflows is the overriding force propelling hyperscale computing beyond prior growth trajectories. These domains generate data streams of unprecedented breadth and density, requiring a computing tier that is not only dense and elastic but also able to orchestrate highly complex, resource-intensive computations. Real-time AI inference can require latencies under 1 ms along with enormous amounts of computation. Training models at the scale of GPT-4 requires more than 25,000 GPUs running for weeks. Hyperscale architectures with millions of elastic vCPUs far exceed the data throughput of enterprise data centers.
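The first driver above quotes both an annual total (over 120 zettabytes in 2023) and a daily rate (2.5 quintillion bytes). A quick unit conversion puts the two on a common footing; this sketch assumes decimal SI prefixes (1 ZB = 10^21 bytes), the short-scale quintillion (10^18), and a 365-day year.

```python
ZETTABYTE = 10**21      # bytes, SI (decimal) definition
QUINTILLION = 10**18    # short-scale quintillion

annual_bytes_2023 = 120 * ZETTABYTE      # "over 120 zettabytes in 2023"
daily_bytes_quoted = 2.5 * QUINTILLION   # "2.5 quintillion bytes daily"

# Average daily volume implied by the annual figure.
implied_daily = annual_bytes_2023 / 365
# Annual volume implied by the daily figure, expressed in zettabytes.
implied_annual_zb = daily_bytes_quoted * 365 / ZETTABYTE

print(f"120 ZB/year is about {implied_daily / QUINTILLION:.0f} quintillion bytes per day")
print(f"2.5 quintillion bytes/day is about {implied_annual_zb:.2f} ZB per year")
```

The two figures differ by more than two orders of magnitude once converted, so they likely measure different things (or come from different years) and are best read separately rather than combined.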

Market Restraints

- High Capital Expenditure and Operational Costs: One of the most significant challenges to adopting hyperscale computing is the immense capital expenditure (CAPEX) required to build and maintain hyperscale data centers. Building hyperscale infrastructure requires not only physical servers but also networking equipment, cooling systems, high-density storage, real estate, and sophisticated security hardware, all of which translate into enormous upfront funding. Operating costs are also high: with traditional cooling designs, cooling alone can take up 40% of total energy consumption, which pushes operators toward more efficient cooling technologies.

- Growing Data Privacy, Security, and Regulatory Challenges: Hyperscale computing involves managing and safeguarding enormous quantities of sensitive data (personal identification, financial transactions, proprietary intellectual property, and enterprise-level strategic analytics), which makes it an attractive target for malicious cyber actors. Despite robust security measures, high-profile data breaches involving major cloud providers have raised questions about the safety of centralized hyperscale systems. Attack vectors such as ransomware, insider threats, phishing, and Distributed Denial of Service (DDoS) attacks are becoming more sophisticated, posing a constant risk to both cloud providers and their enterprise clients. For example, global cybercrime damages were reported at $11.5 trillion, and ransomware attacks, including those targeting hyperscale cloud providers that manage sensitive data, rose by as much as 105% in 2023. Sophisticated security alone does not solve the problem: 43% of organizations reported at least one cloud data breach, revealing persistent weaknesses in centralized hyperscale infrastructure.
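The cooling figure in the first restraint above maps directly onto Power Usage Effectiveness (PUE), the standard ratio of total facility energy to IT equipment energy. The minimal sketch below shows why a 40% cooling share implies a PUE of at least about 1.67; the function names and the hypothetical facility (10 GWh per year, with 5% of energy going to non-cooling overhead) are illustrative assumptions.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    return total_facility_kwh / it_equipment_kwh


def min_pue_given_cooling_share(cooling_share: float) -> float:
    """Lower bound on PUE when cooling alone takes `cooling_share` of total energy.

    Assumes every non-cooling kWh reaches IT equipment (no lighting, UPS, or
    power-distribution losses), so a real facility's PUE can only be higher.
    """
    return 1 / (1 - cooling_share)


# Hypothetical facility: 10 GWh total, 40% cooling, 5% other overhead.
total = 10_000_000.0
it_load = total * (1 - 0.40 - 0.05)
print(f"PUE with 40% cooling + 5% other overhead: {pue(total, it_load):.2f}")
print(f"Best-case PUE with 40% cooling: {min_pue_given_cooling_share(0.40):.2f}")
```

A PUE of 1.0 would mean every kilowatt-hour reaches IT equipment, so shrinking the cooling share is the most direct lever on both this ratio and operating costs.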

Market Opportunities

- Rapid Growth in Edge Computing and IoT Integration: As organizational digital transformation gathers momentum, a growing array of devices, from industrial telemetry modules to consumer smart appliances, produces and sometimes analyzes data locally, pushing computation beyond the traditional network perimeter. This distributed architecture requires hyperscale platforms capable of ingesting, normalizing, and running batch and stream analytics on geographically dispersed data lakes. For instance, more than 30 billion IoT (Internet of Things) devices were expected to be connected by 2024, many of them at the network edge, where data is processed and analyzed close to the components that capture and generate it. Edge computing adoption is expected to grow by 35% per year. Compared with cloud-only models, edge computing can reduce latency by as much as 50%, while hyperscale data centers continue to provide the storage and compute capacity for complex analytics and machine learning on data distributed across multiple sites.

- Digital Transformation Across Emerging Economies: A parallel growth vector lies in the digital maturation of developing and transitional economies across Asia-Pacific, Latin America, the Middle East, and sub-Saharan Africa. As governments and enterprises in these regions increase investment in digital infrastructure, public cloud frameworks, and smart technologies, demand is rising for computing architectures that scale, operate efficiently, and offer assured reliability. Hyperscale computing, with its elastic resource provisioning and high-density design, is best positioned to meet this requirement. Cloud infrastructure in the emerging markets of Asia-Pacific, Latin America, and Africa is expected to grow by 15% per year, driven by developing e-governance, digital payment systems, and telehealth services. Hyperscale computing supports these regions with elastic scaling and 99.99% uptime guarantees. As these countries digitalize rapidly, they are also creating demand for ultra-low-latency services that expanding edge cloud deployments can provide.
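Much of the latency benefit of edge computing described in the first opportunity above comes from shortening the physical path to the compute. The rough Python sketch below assumes light in optical fiber covers about 200 km per millisecond and ignores routing detours, queuing, and last-mile delays; the distances and the 5 ms processing time are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # assumption: light in optical fiber covers roughly 200 km per ms


def round_trip_ms(distance_km: float, processing_ms: float) -> float:
    """Round-trip propagation delay over fiber plus server-side processing time.

    Ignores routing detours, queuing, and last-mile access delay, so real
    round trips are longer than this estimate.
    """
    return 2 * distance_km / FIBER_KM_PER_MS + processing_ms


# Hypothetical deployment: identical 5 ms processing, different distances to compute.
cloud_ms = round_trip_ms(distance_km=1_500, processing_ms=5.0)  # distant cloud region
edge_ms = round_trip_ms(distance_km=50, processing_ms=5.0)      # nearby edge site
print(f"cloud: {cloud_ms:.1f} ms, edge: {edge_ms:.1f} ms, "
      f"reduction: {1 - edge_ms / cloud_ms:.0%}")
```

Because processing time is the same in both cases, the edge advantage shrinks as processing dominates the round trip, which is one reason reported latency reductions vary between deployments.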

Market Challenges

- Energy Consumption and Sustainability Concerns: A concurrent and acute challenge for the hyperscale ecosystem is the escalating electricity demand of mega facilities, which creates environmental, regulatory, and cost-management dilemmas. The very scale that confers competitive advantage, tens of thousands of servers operating in concert, also amplifies absolute energy draw, leading to significant carbon emissions and greater exposure to volatile energy prices. This power burden includes both IT loads and ancillary systems such as cooling, storage, and networking. Current assessments indicate that data centers account for approximately 1 to 2% of global electricity use, with hyperscale facilities, given their high server densities, representing the largest fraction of that total; as of 2023, data centers were estimated to use about 1.5% of global electricity. Growth in capacity, driven by artificial intelligence workloads, 5G rollouts, edge computing, and the expanding Internet of Things, suggests that aggregate power draw will rise along a nearly exponential trajectory. Although renewable energy use and cooling systems have improved, many facilities still operate on grids that draw over 60% of their electricity from fossil fuels, which hampers efforts to reduce the carbon footprint of growing AI and 5G workloads.

- Infrastructure Complexity and Skill Shortages: One of the most pressing issues in the hyperscale computing industry is the shortage of qualified IT specialists to manage and optimize these environments, compounded by the complexity of infrastructure management. Beyond server racks, hyperscale systems integrate networking, storage, virtualization, security, and orchestration components that must work together. Large-scale hyperscale deployments require advanced, specialized knowledge of cloud-native architecture, DevOps, container orchestration (Kubernetes), distributed systems, and cybersecurity. However, a global shortfall of practitioners means professionals with these skill sets are scarce, and the problem is worse in developing countries, where training and education in hyperscale technologies receive less focus. According to an IDC study, as many as 90% of companies are projected to face acute IT skills shortages by 2026, leading to an estimated USD 5.5 trillion in losses from reduced revenue, delayed deployments, and weakened business competitiveness.

Hyperscale Computing Market Segmental Analysis

Component Analysis

Hardware: Hardware is the backbone of hyperscale computing infrastructure, supporting massive workloads, high-performance computing, and rapid data transfer. Key components include custom servers and interconnects, SSD arrays and NVMe storage, cooling technologies, and GPUs and CPUs based on x86 or ARM architectures. Hyperscale data centers place strong emphasis on performance per watt and energy efficiency, and the density of computing units pushes vendors to innovate in liquid cooling, modular racks, and energy-efficient processors. Google and Amazon are already making major advances here: Google's TPUs and Amazon's Graviton3 processors deliver performance gains of 15–30% and 25%, respectively, over previous generations.

Software: In hyperscale environments, software includes automation tooling, resource management systems, hypervisors, container runtimes (Docker, CRI-O), orchestration platforms, distributed file systems such as Hadoop HDFS, AI frameworks such as TensorFlow and PyTorch, and control planes for multi-cloud orchestration. Software determines how efficiently a hyperscale infrastructure is virtualized, automated, and monitored, and the backbone of hyperscale automation relies on platforms such as Kubernetes, OpenStack, and hyperscalers' proprietary systems. In AI operations, TensorFlow and PyTorch are the leading frameworks, and PyTorch adoption in corporate enterprises has grown from 36% in 2020 to more than 75% in 2024, largely because it eases the transition from research to production.
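Resource management systems and orchestration platforms decide where each workload runs. Production schedulers such as the Kubernetes kube-scheduler filter and score nodes across many resources; the first-fit-decreasing heuristic below is a deliberately simplified, hypothetical stand-in that packs workloads by CPU request only. The `Server` class, workload names, and core counts are illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Hypothetical server with a CPU capacity and a list of placed workloads."""
    name: str
    cpu_capacity: float
    placed: list[tuple[str, float]] = field(default_factory=list)

    @property
    def cpu_free(self) -> float:
        return self.cpu_capacity - sum(cpu for _, cpu in self.placed)


def first_fit_decreasing(workloads: dict[str, float], servers: list[Server]) -> list[str]:
    """Place workloads (name -> CPU cores) largest-first onto the first server with room.

    Returns the names of workloads that could not be placed anywhere.
    """
    unplaced = []
    for name, cpu in sorted(workloads.items(), key=lambda kv: kv[1], reverse=True):
        target = next((s for s in servers if s.cpu_free >= cpu), None)
        if target is None:
            unplaced.append(name)
        else:
            target.placed.append((name, cpu))
    return unplaced


# Hypothetical cluster of two 16-core servers and five containers.
cluster = [Server("node-a", 16), Server("node-b", 16)]
jobs = {"db": 8, "api": 6, "cache": 4, "worker": 10, "batch": 6}
leftover = first_fit_decreasing(jobs, cluster)
for s in cluster:
    print(s.name, s.placed, "free:", s.cpu_free)
print("unplaced:", leftover)
```

Sorting largest-first tends to leave fewer unusable fragments than placing workloads in arrival order; here total demand (34 cores) exceeds capacity (32 cores), so one workload is reported as unplaced, which is the signal an elastic platform would use to add a server.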

Services: Professional services include deployment consulting, system integration, performance tuning, security auditing, and managed services. These services are essential for monitoring system usage, reducing downtime, and strengthening cyber defenses.

Enterprise Size Analysis

Based on enterprise size, the market is classified into large enterprises and SMEs. The large enterprises segment dominated the market in 2024.

Hyperscale Computing Market Revenue Share, By Enterprise Size, 2024 (%)

| Enterprise Size | Revenue Share, 2024 (%) |
|---|---|
| Large Enterprises | 70.40% |
| SMEs | 29.60% |

End-User Analysis

Cloud Providers: Cloud providers are the primary builders and users of hyperscale infrastructure. Companies such as AWS, Google Cloud, Microsoft Azure, Oracle Cloud, and Alibaba Cloud operate thousands of hyperscale data centers around the world. AWS alone holds a 32% share of the global cloud market, with Azure and Google Cloud at 23% and 11%, respectively, and all three serve millions of clients across diverse sectors. Their expansion increases the need for advanced networking, AI infrastructure, and global content delivery. Cloud providers adopt hyperscale computing to support millions of customers, run massive virtual workloads, and scale services dynamically. Their in-house innovations include custom silicon (TPUs, Graviton), zonal fault tolerance, and energy-efficient cooling.
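Zonal fault tolerance works by spreading a service across availability zones so that it stays up as long as any one zone does. Under the simplifying, and optimistic, assumption that zone failures are independent, an n-zone deployment has availability 1 - (1 - a)^n for per-zone availability a. The sketch below uses a hypothetical 99.9% per-zone figure and converts each result into downtime per year, assuming an average 365.25-day year; the function names are illustrative.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # average year, including leap days


def multi_zone_availability(zone_availability: float, zones: int) -> float:
    """Availability of a service that stays up while at least one zone is up.

    Assumes zone failures are independent, which real deployments only
    approximate (shared control planes, regional events, and correlated
    software bugs all break this assumption).
    """
    return 1 - (1 - zone_availability) ** zones


def downtime_minutes_per_year(availability: float) -> float:
    """Minutes of downtime per year implied by a given availability."""
    return (1 - availability) * MINUTES_PER_YEAR


# Hypothetical per-zone availability of 99.9%.
for n in (1, 2, 3):
    a = multi_zone_availability(0.999, n)
    print(f"{n} zone(s): availability {a:.9f}, "
          f"about {downtime_minutes_per_year(a):.4f} min downtime/year")

# For comparison, a 99.99% uptime guarantee allows roughly 52.6 minutes per year.
print(f"99.99% -> {downtime_minutes_per_year(0.9999):.1f} min/year")
```

The independence assumption is what makes the numbers look so favorable; correlated failures are why providers still publish regional outage postmortems despite multi-zone designs.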

Government & Defence: Governments deploy hyperscale computing for surveillance, intelligence processing, e-governance, and scientific simulations. Defence agencies use high-speed computing for real-time analytics, cybersecurity operations, cryptographic modeling, and battle-scenario simulations. Because of data sovereignty and sensitivity requirements, many agencies rely on on-premise or dedicated government cloud deployments. For instance, the U.S. Department of Defense's Joint Warfighting Cloud Capability (JWCC), awarded in 2022 to AWS, Microsoft, Google, and Oracle, is a $9 billion multi-cloud contract to provide secure, high-capacity compute resources across unclassified, secret, and top-secret domains. Similarly, the EU is spending €2 billion with AWS to develop exascale systems for AI-driven research, climate modeling, and defense.

BFSI: Banks and insurers use hyperscale technology for fraud detection, customer analytics, algorithmic trading, real-time risk analysis, and post-event compliance reporting. These and many other BFSI use cases require low-latency, always-on, and highly compliant systems, which in turn fuels the need for hyperscale-grade computing.

Others: Beyond public cloud environments, hyperscale paradigms now permeate education (for example, MOOC platforms serving asynchronous learning), advanced manufacturing (real-time digital-twin analytics and predictive maintenance), retail (microservices for demand forecasting and personalized recommendation engines), and media (adaptive OTT streaming and latency-sensitive video rendering).

Deployment Type Analysis

On-Premise: The on-premise deployment model is used mostly by tech giants, telecom operators, and major financial institutions that want full control of their infrastructure. Customers in this category tend to prioritize ultra-low latency, regulatory compliance, and long-term cost optimization. On-premise hyperscale is expensive to establish, but it improves privacy and data localization through tailored workloads. Meta (formerly Facebook), Apple, and Baidu have built on-premise hyperscale campuses. These projects face obstacles such as a shortage of skilled workers, long setup times of 24–36 months, and high running costs for cooling and energy management. A single large-scale site can consume enough energy in a year to power 80,000 U.S. homes.

Hyperscale Computing Market Revenue Share, By Deployment Type, 2024 (%)

| Deployment Type | Revenue Share, 2024 (%) |
|---|---|
| On-Premise | 40% |
| Cloud-Based | 60% |

Cloud-Based: In 2024, cloud-based deployments made up 60% of hyperscale computing revenue, driven by the need for flexibility, scalability, global reach, pay-as-you-go pricing, and automatic backup. Businesses of all sizes use public cloud vendors to access hyperscale capabilities without owning physical infrastructure. Global SaaS distribution, intensive workloads, big data analytics, and AI model training all benefit from this model.

Hyperscale Computing Market Regional Analysis

Why does North America dominate the hyperscale computing market?

- The North America hyperscale computing market size was valued at USD 23.29 billion in 2024 and is expected to reach around USD 186.75 billion by 2034.

The U.S.-dominated region hosts the largest number of hyperscale computing facilities. AWS, Microsoft, Google, and Meta, which run massive workloads, lead the construction of new centers across the region. According to recent estimates, North America leads the market with over 34% of the world's operational hyperscale data centers as of 2024. The United States also leads in AI and 5G rollout and in clean-energy data center infrastructure. The region's policies, workforce, and mature connectivity infrastructure foster innovation and investment in hyperscale technologies. Thanks to its climate and renewable energy resources, Canada is emerging as a secondary hub. North America also has high cloud adoption in the BFSI, government, and healthcare sectors.

Why is Asia-Pacific the fastest-growing region in the hyperscale computing market?

- The Asia-Pacific hyperscale computing market size reached USD 17.81 billion in 2024 and is forecast to reach around USD 142.81 billion by 2034.

The Asia-Pacific (APAC) region is currently the fastest-growing market, led by South Korea, Australia, India, and China, alongside advanced technology hubs such as Japan and rapidly digitalizing nations such as Indonesia and Vietnam. Large-scale investments in smart cities and 5G infrastructure are expected to further digitize the region, driving a 20-25% CAGR over the forecast period. Alongside smart-city and digitization programs, there is a focus on local cloud suppliers, supported by government policy frameworks such as India's data localization rules. Strong government support, rising adoption of the digital economy, and expanding internet access make for a unique mix of growth factors.

Hyperscale Computing Market Revenue Share, By Region, 2024 (%)

| Region | Revenue Share, 2024 (%) |
|---|---|
| North America | 34% |
| Europe | 30% |
| Asia-Pacific | 26% |
| LAMEA | 10% |

What are the driving factors of Europe hyperscale computing market?

- The Europe hyperscale computing market size was valued at USD 20.55 billion in 2024 and is projected to hit around USD 164.78 billion by 2034.

Europe is known for strict regulatory supervision, and GDPR has significantly shaped hyperscale operations in the region. Europe has strong digital infrastructure, especially in Germany, the UK, France, and the Nordics. There is increasing attention on green hyperscale data centers that use renewable energy sources and liquid cooling systems. Projects such as Gaia-X aim to build sovereign European cloud alternatives. Adoption is strong in automotive, manufacturing, BFSI, and health tech. Digital sovereignty and energy concerns are driving innovation in the region's hyperscale architecture. Growth is driven primarily by big data analytics and artificial intelligence (AI), which call for exceptional processing power, real-time scalability, large memory capacity, and other capabilities available in hyperscale computing environments. The European hyperscale market is expected to grow at a CAGR of 18% through 2030, fueled by AI applications in deep learning, autonomous systems, medical systems, and fraud detection that require massive compute density and real-time scaling.

Why is LAMEA a strategically located frontier market for hyperscale computing?

- The LAMEA hyperscale computing market was valued at USD 6.85 billion in 2024 and is anticipated to reach around USD 54.93 billion by 2034.

LAMEA is a strategically located frontier market with untapped potential in areas with rising digital penetration and favorable government policies. In Latin America, the hyperscale leaders are Mexico and Brazil, with Brazil accounting for over 40% of the region's hyperscale capacity. Both countries are supported by booming e-commerce, digital retail, streaming, and fintech. AWS recently announced an expansion of its São Paulo cloud region backed by a $1.35 billion investment, and Microsoft is strengthening its data center presence with a $1.1 billion investment in Mexico. In the Middle East, efforts center on the United Arab Emirates and Saudi Arabia, which are funding hyperscale data center clusters to support smart-city initiatives.
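The regional market sizes quoted in this section can be cross-checked against the revenue-share table and the global forecast. The short Python sketch below recomputes each region's 2024 share and the CAGR implied by its 2024 and 2034 values, assuming ten annual compounding periods; the dictionary simply restates the figures above.

```python
regions = {  # USD billion: (2024 value, 2034 forecast) as quoted in this section
    "North America": (23.29, 186.75),
    "Europe": (20.55, 164.78),
    "Asia-Pacific": (17.81, 142.81),
    "LAMEA": (6.85, 54.93),
}

total_2024 = sum(v2024 for v2024, _ in regions.values())
for name, (v2024, v2034) in regions.items():
    share = v2024 / total_2024
    implied_cagr = (v2034 / v2024) ** (1 / 10) - 1  # 2024 -> 2034, ten compounding periods
    print(f"{name:14s} share {share:.1%}  implied CAGR {implied_cagr:.2%}")
print(f"Sum of regions, 2024: {total_2024:.2f}")
```

On these figures, the regions sum to USD 68.50 billion in 2024 (against the USD 68.51 billion global estimate), their shares match the table, and each region's implied CAGR comes out close to the global 23.14%.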

Hyperscale Computing Market Top Companies

Recent Developments

- July 2025: Broadcom rolled out Tomahawk Ultra, an Ethernet switch for AI and HPC clusters offering ultra-low latency (≈250 ns) and 51.2 Tbps throughput. It enables open-standard, lossless networking, can link hundreds of chips, and challenges Nvidia's InfiniBand dominance.

- July 2025: Mark Zuckerberg announced plans for Meta's 1 GW “Prometheus” cluster, going live in 2026, and “Hyperion”, targeting up to 5 GW over several years, relying in part on tent-style structures to house GPUs and scale AI compute rapidly.

- July 2025: The UK activated Isambard-AI in Bristol, an Nvidia-powered AI supercomputer with about 5,400 chips, ranked 11th in the world and among the greenest. It marks the start of the UK's £1 billion investment in national AI infrastructure, intended to increase public compute capacity twenty-fold by 2030.

Market Segmentation

By Component

- Hardware

- Software

- Services

By Deployment Type

- On-Premise

- Cloud-Based

By Enterprise Size

- Large Enterprises

- SMEs

By Application

- Cloud Computing

- Big Data

- IoT

- Others

By End-User

- Cloud Providers

- Government & Defence

- BFSI

- Retail & E-commerce

- Healthcare

- Other

By Region

- North America

- APAC

- Europe

- LAMEA